An updated guide to Serilog.Sinks.Seq

The workhorse Serilog.Sinks.Seq package has a history going right back to the very first production Serilog versions. I've written a lot about its various features as they've appeared, but with that information spread across many blog posts, GitHub issues, and tweets, it's worth stepping back for a moment to review the current feature set and how to get the most out of this little package.

What does Serilog.Sinks.Seq actually do?

The sink sends log events from Serilog over HTTP (or HTTPS) to a central Seq log server.

Behind the scenes, the sink collects events into batches to reduce network round-trips, manages oversized events and other events rejected by the server, and has a number of options for dealing with network failures.

If a logging level is associated server-side with the API key used when connecting to Seq, the sink can also filter out events client-side, and even control the main Serilog logger's minimum level, to conserve network bandwidth or increase the amount of information available for debugging.

The defaults

With only a serverUrl and optional apiKey specified, the sink works well for most apps, with all versions of Seq (right back to 1.0!):

Log.Logger = new LoggerConfiguration()
    .WriteTo.Seq("http://localhost:5341", apiKey: "hh74tjva8a8jfdsnl23n")
    .CreateLogger();

The values used for things like batching time and size limits are designed to perform well and work within Seq's server-side defaults.

(To save space, we'll leave out the API key in the remaining code samples.)

Saving bandwidth

Since Seq 3.3, the server-side ingestion API has accepted a new JSON format for events that cuts down payload size, especially when events are small.

Adding compact: true switches to this format:

.WriteTo.Seq("http://localhost:5341", compact: true)

It's a quick little win, if the version of Seq you're using supports it.

Managing network and server outages

How should log messages be handled if Seq is inaccessible? There are a few ways to look at this, and the right approach will depend a lot on the kind of application you're building, as well as the network you're operating on.

By default, Serilog.Sinks.Seq will use an in-memory queue to keep log events around while connectivity is reestablished. What are the trade-offs? Events will be lost in this configuration if the client app is restarted during the outage. Events will also be lost if the duration of the outage exceeds the buffering time applied by the sink (~10 minutes), or if the number of events exceeds the maximum queue length that the sink enforces (100,000). These measures are intended to prevent unbounded memory growth - log events can pile up quickly, so the sink needs to be careful not to let this get out of hand.

The defaults make sense for most applications and networks: log data is important, but the desires for system stability and predictable resource usage justify the risk of occasionally losing a small number of events.

If your network is less reliable, or your tolerance for event loss is lower, Serilog.Sinks.Seq provides durable log shipping. Events are written to a buffer file, and a background thread ships the contents of the file to Seq. Specifying the buffer filename turns on durable mode:

.WriteTo.Seq("http://localhost:5341", bufferBaseFilename: "logs/my-app")

The buffer file survives restarts, so longer and more frequent network or server outages can be handled without event loss. The bufferSizeLimitBytes argument can be used to cap the amount of disk space used by the buffer.

Durable log shipping does come with a manageability cost: if an event can't be sent to Seq, for one reason or another, it will be written out to an invalid-* file alongside the log buffer. In the spirit of "durability", these invalid payload files won't be deleted by default; you should monitor for them and investigate/remove them periodically. Invalid payloads can be cleaned up automatically by specifying retainedInvalidPayloadsLimitBytes.
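As a rough sketch, a durable configuration that caps both the buffer and the retained invalid payloads might look like the following. The 100 MB and 10 MB limits are illustrative values chosen for this example, not recommendations, and the snippet assumes C# top-level statements.

```csharp
using Serilog;

Log.Logger = new LoggerConfiguration()
    .WriteTo.Seq(
        "http://localhost:5341",
        // Specifying a buffer base filename turns on durable mode
        bufferBaseFilename: "logs/my-app",
        // Illustrative cap on the disk space used by the buffer (100 MB)
        bufferSizeLimitBytes: 100L * 1024 * 1024,
        // Illustrative cap on retained invalid-* payload files (10 MB)
        retainedInvalidPayloadsLimitBytes: 10L * 1024 * 1024)
    .CreateLogger();
```

With a limit on invalid payloads in place, it is still worth checking the folder occasionally, since a steady stream of rejected events usually points to a problem worth investigating.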

There's one important limitation of durable log shipping: the buffer file can only be used by one process at a time. If multiple instances of the app run concurrently, they can't use the same buffer file. In these situations, Seq Forwarder is a better solution.

Controlling log levels

All Serilog sinks can receive a subset of the log stream, and the Seq sink supports this, too, through the restrictedToMinimumLevel parameter:

.WriteTo.Seq("http://localhost:5341", restrictedToMinimumLevel: LogEventLevel.Information)

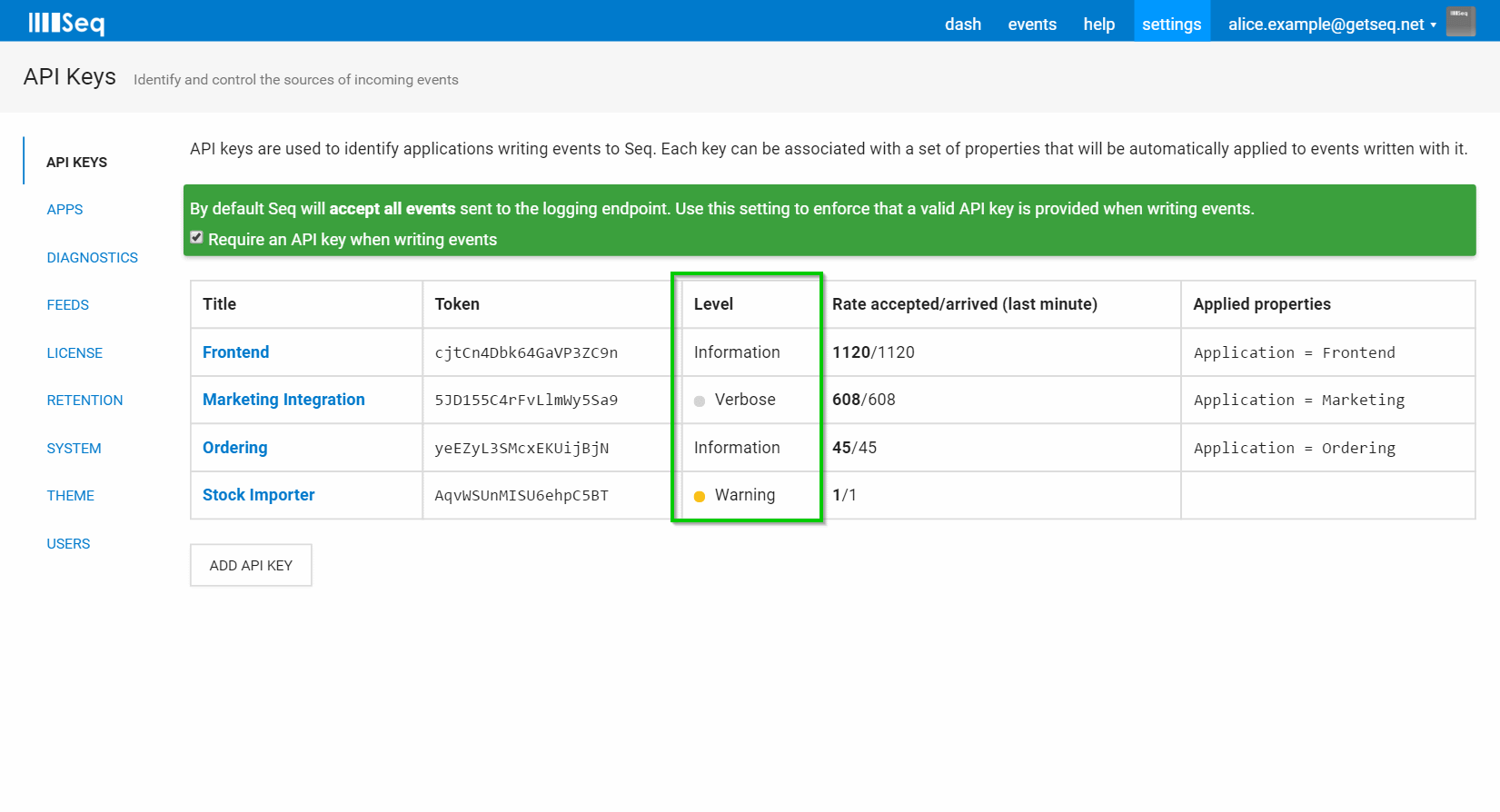

There are a few places where this is useful, but it's not always necessary since the level can be set on the Seq server through the API key:

Under the default configuration, the server will pass this value down to the sink in responses when any events are logged, and the sink will perform client-side filtering to improve network and server performance.

This is great for subtractive filtering, but what about giving a part of the system a logging boost? Filtering the event stream won't help if the events aren't created in the first place, and for this, the Serilog pipeline's minimum level needs to be modified.

Serilog.Sinks.Seq has an efficient answer to this. First, instead of setting a fixed minimum level for the pipeline, a Serilog LoggingLevelSwitch is used:

var levelSwitch = new LoggingLevelSwitch(LogEventLevel.Information);

Log.Logger = new LoggerConfiguration()
    .MinimumLevel.ControlledBy(levelSwitch)
    // ...

Now, changing the levelSwitch.MinimumLevel property turns the Serilog logging level up or down. Instead of doing this by hand, the level switch is passed through to the Seq sink, which sets the level based on the value from Seq:

.WriteTo.Seq("http://localhost:5341", controlLevelSwitch: levelSwitch)

Changing the API key's logging level will change the level of the logging pipeline. If there are no events flowing, you may need to wait up to two minutes for a "background level check" to be performed.
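Putting the level switch pieces together, a complete configuration might look roughly like this. It is a minimal sketch that assumes C# top-level statements and reuses the sample server URL and API key from earlier in this post.

```csharp
using Serilog;
using Serilog.Core;
using Serilog.Events;

// Start at Information; the sink adjusts the switch once Seq reports
// the level associated with the API key
var levelSwitch = new LoggingLevelSwitch(LogEventLevel.Information);

Log.Logger = new LoggerConfiguration()
    .MinimumLevel.ControlledBy(levelSwitch)
    .WriteTo.Seq(
        "http://localhost:5341",
        apiKey: "hh74tjva8a8jfdsnl23n",
        controlLevelSwitch: levelSwitch)
    .CreateLogger();

// Not created while the switch is still at Information
Log.Debug("Starting up with verbose diagnostics");

// Created and sent; also records the level currently in effect
Log.Information("Logging at {Level}", levelSwitch.MinimumLevel);

Log.CloseAndFlush();
```

The same switch instance has to be passed to both MinimumLevel.ControlledBy() and the sink, otherwise the level reported by Seq has nothing to act on.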

Audit logging

Requirements sometimes dictate that a transaction is rolled back if a log event describing it can't be written. For this, Serilog provides AuditTo, and the Seq sink hooks in on top of this:

.AuditTo.Seq("http://localhost:5341")

There are two differences in the sink's behavior in audit mode:

- Failures writing events to Seq will result in exceptions that propagate back out from the logger, and

- Events are sent individually, in synchronous web requests.

Sending events individually increases latency and reduces throughput; this is a typical trade-off to make for audit logging, but it's important to keep in mind when deciding whether auditing is necessary in an application.
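To illustrate how audit mode interacts with a transaction, here is a hedged sketch. CommitTransfer() and RollBackTransfer() are hypothetical placeholders for the application's own transaction handling, and the event properties are made up for the example.

```csharp
using System;
using Serilog;

using var audit = new LoggerConfiguration()
    .AuditTo.Seq("http://localhost:5341")
    .CreateLogger();

try
{
    // In audit mode this call is synchronous and throws if the event
    // can't be written to Seq
    audit.Information(
        "Transferring {Amount} from {Source} to {Destination}",
        100m, "A-001", "B-002");

    CommitTransfer();
}
catch (Exception ex)
{
    // Reached if the audit event could not be written, or if the commit failed
    Console.Error.WriteLine("Rolling back: {0}", ex.Message);
    RollBackTransfer();
}

// Hypothetical placeholders, not part of Serilog or the Seq sink
static void CommitTransfer() { }
static void RollBackTransfer() { }
```

Keeping the audit logger separate from the application's general-purpose logger limits the latency cost to the events that genuinely need the guarantee.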

Debugging connection issues

Like other Serilog sinks, unless it's used in audit mode, the Seq sink won't throw exceptions back to the caller when logging fails (Serilog implements this policy). To find out what's going on when events stop flowing, enable Serilog's SelfLog:

SelfLog.Enable(Console.Error);
// -> 2017-10-31T00:22:45.6043042Z Exception while emitting ...

There are a few options for collecting SelfLog messages: they can be written to a TextWriter, such as Console.Error or a regular file, or passed to a custom Action<string> handler given to Enable(). Wherever you send them, make sure the TextWriter or handler is thread-safe.
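One way to meet the thread-safety requirement for a file is to wrap the writer with TextWriter.Synchronized(). The sketch below assumes C# top-level statements, and the file name is a hypothetical choice for this example.

```csharp
using System.IO;
using Serilog.Debugging;

// Option 1: write to a file through a synchronized (thread-safe) wrapper
var file = File.CreateText("serilog-selflog.txt"); // hypothetical path
SelfLog.Enable(TextWriter.Synchronized(file));

// Option 2 (use instead of option 1): pass a custom handler
// SelfLog.Enable(message => System.Diagnostics.Debug.WriteLine(message));
```

SelfLog output is only produced when something goes wrong, so an empty file generally means the sink hasn't reported any failures.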

Gotcha!

By far the most common problem reported with the Seq sink is this one:

I'm testing out Serilog in a console app - why don't any events appear in Seq?

And nine times out of ten, the issue will be that the app is exiting before the background HTTP request to Seq can complete. Because batched logging is an asynchronous process, you must shut the sink down cleanly using either:

Log.CloseAndFlush();

or:

logger.Dispose();

before your application process terminates. This is good practice in any application using Serilog.
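For a console app, a try/finally block is a simple way to make sure the flush always happens. This is a minimal sketch, assuming C# top-level statements and the static Log class:

```csharp
using System;
using Serilog;

Log.Logger = new LoggerConfiguration()
    .WriteTo.Seq("http://localhost:5341")
    .CreateLogger();

try
{
    Log.Information("Hello, {Name}!", Environment.UserName);
}
finally
{
    // Sends any batched events to Seq before the process exits
    Log.CloseAndFlush();
}
```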

Settings you shouldn't need to touch

Finally, you can raise the event size limit, eventBodyLimitBytes, to allow larger events through to Seq (there's a corresponding setting on the server that also has to be increased). In some unusual situations, there may be value in logging occasional events with more than a few kilobytes of data.

More often, large events indicate logging problems. Perhaps you tried to serialize an object with Serilog's @ that wasn't designed for serialization? Deep or circular object graphs can generate large payloads, even though Serilog has some safety valves that place an upper bound on event size.

Seq can accept large events, but it's designed to work best with typical log events rather than large application data payloads. Before changing the event size limit, try redesigning the event to capture only the most important information from the associated objects.
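As an example of that kind of redesign, the sketch below logs a couple of scalar properties instead of serializing a whole object. The Order type and its properties are hypothetical, invented purely for illustration, and the snippet assumes C# 9 or later for top-level statements and records.

```csharp
using System.Collections.Generic;
using Serilog;

Log.Logger = new LoggerConfiguration()
    .WriteTo.Seq("http://localhost:5341")
    .CreateLogger();

var order = new Order(42, "alice@example.com", new List<string> { "widget", "gadget" });

// Serializes the entire object graph into the event; potentially large:
// Log.Information("Processed {@Order}", order);

// Captures only the details needed to find and understand the event later
Log.Information(
    "Processed order {OrderId} with {ItemCount} items",
    order.Id, order.Items.Count);

Log.CloseAndFlush();

// Hypothetical application type used only for this example
record Order(int Id, string CustomerEmail, List<string> Items);
```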